Serialization transforms an object into a byte stream so that it can be moved out of a process, either to persist it or to send it to another process. Deserialization is the reverse: it transforms a byte stream back into an object.

Unlike a stand-alone cache, a distributed cache must serialize objects so that it can send them to the different computers in the cache cluster. However, the serialization mechanism provided by the .NET Framework has two major problems:

1. Very slow: .NET serialization uses Reflection to inspect type information at runtime, and Reflection is very slow compared to precompiled code.

2. Very bulky: .NET serialization stores the complete class name, culture, and assembly details, as well as references to other instances held in member variables. All this makes the serialized byte stream many times larger than the original object.

Since a distributed cache is used to improve application performance and scalability, anything that hampers them is critical. Regular .NET serialization is a major performance overhead in a distributed cache because thousands of objects must be serialized every second before being sent to the cache for in-memory storage, so any slowdown here becomes a slowdown of the distributed cache itself.

The other issue is that a bulky serialized byte stream can consume 2-3 times more space, which reduces the overall storage capacity of a distributed cache. In-memory storage can never be as large as disk storage, which makes this an even more sensitive issue for a distributed cache.

To overcome these .NET serialization problems, NCache implements a Compact Serialization Framework. In this framework, NCache stores two-byte type IDs instead of fully qualified assembly and class names. It further shrinks the serialized byte stream by serializing only the field values and excluding their type details. Finally, the framework avoids the overhead of .NET Reflection by accessing the fields and properties of the object directly.
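To make the size difference concrete, here is a minimal Python sketch of the general idea. It is not NCache's actual wire format: the `Product` class, the type-ID table, and the field layout are hypothetical. The compact form writes a two-byte type ID followed by the raw field values only, while the self-describing form (a rough stand-in for the verbose behavior described earlier) spells out the qualified class name plus each field's name and type.

```python
import struct
from dataclasses import dataclass


@dataclass
class Product:  # hypothetical cached type
    product_id: int
    price: float
    units_in_stock: int


# Hypothetical type-ID table: each registered class gets a two-byte ID.
TYPE_IDS = {Product: 1}
TYPES_BY_ID = {type_id: cls for cls, type_id in TYPE_IDS.items()}

# Little-endian, no padding: 2-byte type ID, int64, float64, int32 (22 bytes total).
# Field names and field types are not written; the type ID implies them.
PRODUCT_LAYOUT = struct.Struct("<Hqdi")


def compact_serialize(product):
    return PRODUCT_LAYOUT.pack(
        TYPE_IDS[type(product)], product.product_id, product.price, product.units_in_stock
    )


def compact_deserialize(data):
    type_id, product_id, price, units_in_stock = PRODUCT_LAYOUT.unpack(data)
    cls = TYPES_BY_ID[type_id]  # map the two-byte ID back to the class
    return cls(product_id, price, units_in_stock)


def self_describing_serialize(obj):
    # Stand-in for a verbose format: qualified class name plus name and type of every field.
    cls = type(obj)
    parts = [f"{cls.__module__}.{cls.__qualname__}"]
    for name, value in vars(obj).items():
        parts.append(f"{name}:{type(value).__name__}={value!r}")
    return ";".join(parts).encode("utf-8")


item = Product(product_id=42, price=19.99, units_in_stock=7)
compact = compact_serialize(item)
verbose = self_describing_serialize(item)
print(len(compact), len(verbose))
```

The compact payload is always 22 bytes for this layout, regardless of how long the class or field names are, because those names never reach the byte stream.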

There are two ways to use NCache Compact Serialization in your application.

- Let NCache generate Compact Serialization code at runtime

- Implement the ICompactSerializable interface yourself

In this blog, I will stick to the first approach only and discuss the second approach in a separate blog.

Let NCache generate Compact Serialization code at runtime

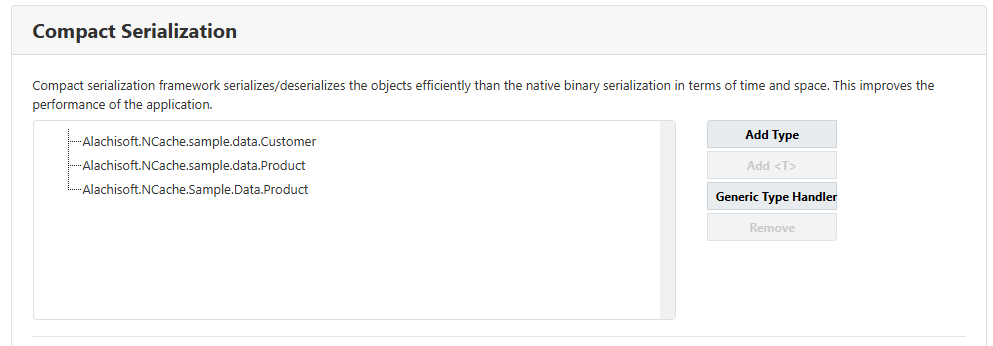

Identify the types of objects you are caching, and register them with NCache as Compact Serialization types as shown in Figure. That is all you have to do, and NCache takes care of the rest.

Figure 1: Register Types for Compact Serialization with NCache

NCache sends the registered types to the NCache client at initialization. Based on the received types, the client generates the runtime code to serialize and deserialize each type. This code is generated only once, at initialization, and is then reused for every subsequent operation, which is why it runs much faster than Reflection-based serialization.
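The register-once, reuse-many pattern can be sketched in Python as follows. This is an illustration under stated assumptions, not the NCache client: `CompactRegistry`, the `Point` and `Order` classes, and the mapping from field types to fixed-size encodings are all hypothetical. Each registered type is inspected exactly once, when the registry is built, to produce a precompiled `struct.Struct` layout and a pair of serialize/deserialize closures; later calls reuse those closures instead of inspecting the type again.

```python
import struct
from dataclasses import dataclass, fields

# Hypothetical mapping from field types to fixed-size struct codes.
_FORMAT_CODES = {int: "q", float: "d", bool: "?"}
_TYPE_ID = struct.Struct("<H")  # two-byte type ID at the start of every payload


def _build_codec(cls, type_id):
    """Inspect cls once and return (serialize, deserialize) closures for it."""
    cls_fields = fields(cls)
    names = tuple(f.name for f in cls_fields)
    layout = struct.Struct("<H" + "".join(_FORMAT_CODES[f.type] for f in cls_fields))

    def serialize(obj):
        # Reuses the precompiled layout; no type inspection happens here.
        return layout.pack(type_id, *(getattr(obj, name) for name in names))

    def deserialize(data):
        _, *values = layout.unpack(data)  # skip the type ID, keep field values
        return cls(*values)

    return serialize, deserialize


class CompactRegistry:
    """Builds codecs for the registered types at start-up and reuses them afterwards."""

    def __init__(self, registered_types):
        self._serializers = {}
        self._deserializers = {}
        for type_id, cls in enumerate(registered_types, start=1):
            serialize, deserialize = _build_codec(cls, type_id)
            self._serializers[cls] = serialize
            self._deserializers[type_id] = deserialize

    def serialize(self, obj):
        return self._serializers[type(obj)](obj)

    def deserialize(self, data):
        (type_id,) = _TYPE_ID.unpack_from(data)  # read the two-byte type ID
        return self._deserializers[type_id](data)


@dataclass
class Point:  # hypothetical cached type
    x: float
    y: float


@dataclass
class Order:  # hypothetical cached type
    order_id: int
    quantity: int
    paid: bool


registry = CompactRegistry([Point, Order])
payload = registry.serialize(Order(order_id=1001, quantity=3, paid=True))
print(registry.deserialize(payload))
```

In .NET the equivalent one-time step would produce compiled code; in this sketch the precompiled `struct.Struct` objects and closures play that role, so the per-call cost is a dictionary lookup plus packing.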

Hence, with NCache Compact Serialization you can use your distributed cache memory efficiently and boost your application performance.

So, download a fully working 60-day trial of NCache Enterprise and try it out for yourself.

Hi,

We understand that you have described two options above. When would you use Compact Serialization through the IDE versus implementing the interface?

-Javed